Originally posted on the Student Engager blog on 3 July 2017.

A PhD often feels like an unrewarding process. There are setbacks, data failures, non-significant results, and a general lack of the small successes that (I hear) make ordinary working life pleasant: “I got that promotion!” “Everyone applauded my presentation!” “I moved to the desk near the window!” PhD life is one giant slog until the end, a nerve-wracking hours-long session where you’re grilled by the only people who know more about your field than you.

I survived.

Hopefully some of you have been following my research here, starting from astronauts and moving on to runners and foraging patterns. It all ties together, I promise. I recently gave a talk at the Engagers’ event “Materials & Objects” summarizing my research, which I can now tell you about in its full glory! I’m pleased to announce: I had significant findings.

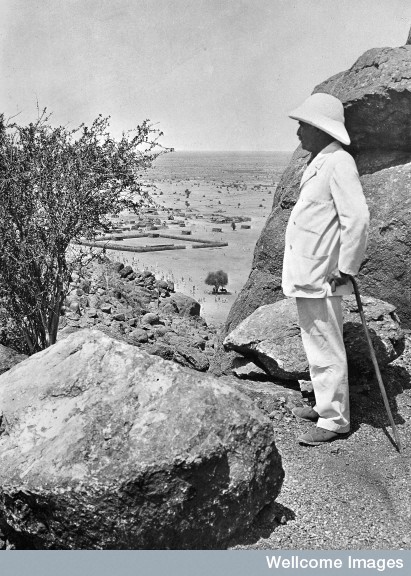

The lowdown is that (as expected) there are differences in the shape of the tibia (shin bone) between nomads and farmers in Sudan. Why would this be? Well, if you’ve been following along, bones change shape in response to activity, particularly activities performed during adolescence. The major categories of tibial shape were those that indicated long-distance walking, activity performed in one place, and very little activity. Looking at the distribution, the majority of the nomadic males had the leg shape indicating long-distance walking, while some of the agricultural males had the long-distance shape and others the staying-in-place shape. This makes sense considering the varying types of activity performed in an agricultural society, particularly one that also had herds to take care of: some individuals would be taking the herds up and down along the Nile to find grazing land while others stayed local, tending farms. While it’s unclear how often a group needs to move camp to be considered truly nomadic, in this case it seems they were walking a lot – enough to compare their tibial shape to that of modern long-distance runners. These differences in food acquisition are culturally adapted responses to differing environments: the nomads live in semi-arid grassland and can travel slowly over a large area to graze sheep and cattle, while the farmers are constrained to a narrow strip of fertile land along the Nile banks, limiting how many people can move around, and how often.

Perhaps the most important finding is the difference between males and females. In addition to looking at shape, I also conducted tests to show how strong each bone is regardless of shape, a result called the polar second moment of area (and shortened to, unexpectedly, J). The males at each site had higher values for J – thus, stronger bones – than the females. However, the nomadic females had higher J values than some of the males at the agricultural sites! This is in spite of most females from both sites having the tibial shape indicating “not very much activity”. This shape may be the juvenile shape of the tibia, which females have retained into adulthood despite performing enough activity to give them higher strength values than male farmers. Similar results have actually been noted in studies comparing different time periods – for instance, the Paleolithic and the Neolithic – which found much more similarity between females than between males. Researchers often interpret this as evidence that male roles changed while female roles remained the same, which strikes me as unlikely considering the time spans covered. I instead conclude that females build bone differently in adolescence, and perhaps there are subtleties in bone development that don’t reveal themselves as differences in shape. As females have lower levels of testosterone, which builds bone as well as muscle, they may have to work harder or longer than males to attain the same bone shape and strength. I’m using this to argue that the roles of women in archaeological societies – particularly nomadic ones – have gone unexamined in light of biological evidence.
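
For the curious, here is a rough sketch of what J measures, using the standard textbook definition from beam mechanics. The symbols below are the usual conventions and are assumptions for illustration, not notation taken from the research itself, and the post does not say exactly how J was calculated for these bones.

$$
J = \int_A r^2 \, dA = I_x + I_y
$$

Here $A$ is the area of bone in a cross-section of the shaft, $r$ is the distance of each small patch of bone $dA$ from the centre (centroid) of that cross-section, and $I_x$ and $I_y$ are the second moments of area about two perpendicular axes through the centroid. Because $r$ is squared, bone lying farther from the centre counts for much more than bone near it. And because J sums both axes, two cross-sections with quite different outlines can share the same J, which is why it can serve as a measure of strength that is independent of shape.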

Of course, the best conclusion for a PhD is a call for more research, and mine is that we need to examine male and female adolescent athletes together to see when exactly shape change occurs. If we can pin down the amount of activity necessary for women to have bones as strong as those of their male peers, we can more accurately interpret the types of activities ancient people were performing without devaluing the work of women.

My examiners found all this enthralling, and I’m pleased to say I passed! The work of this woman is valued in the eyes of the academy.